Objective:

Several actuators were returned from the field due to a failure caused by the cylinder not being bored deep enough to allow full piston retraction. This defect should have been identified during testing, so we needed to determine how and where the issue was missed.

Solution:

Review and compare the recorded test data for every actuator shipped over the past five years, including test stand output, associate ATR sheets, and customer acceptance test data.

Tools/Skills Used:

Excel VBA Automation, Cross-Team Investigation, Collaboration with Internal Teams and External Partners

Approach:

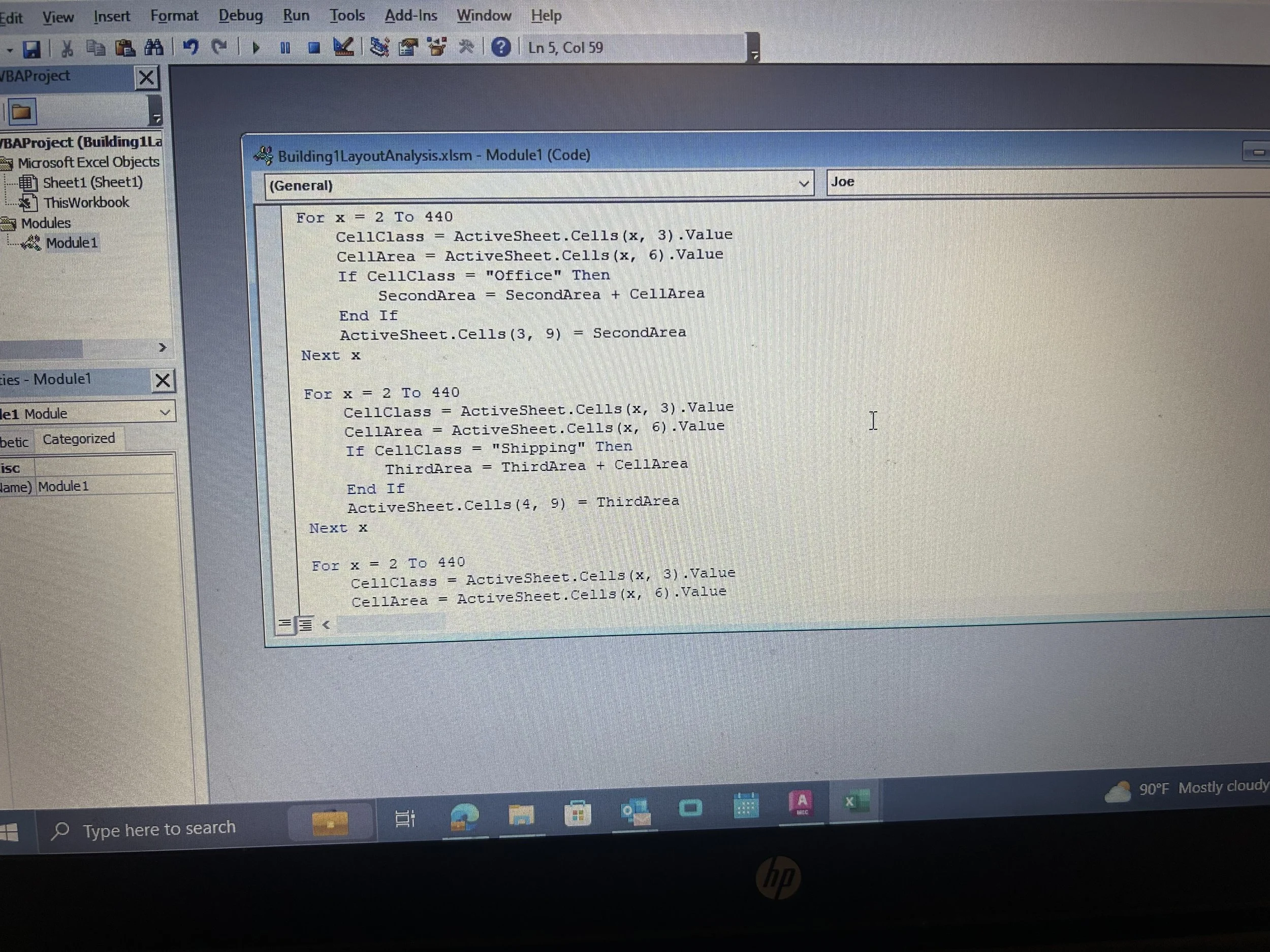

Consolidated all three data sources into a unified Excel dataset, then developed a VBA script to automatically compare values and flag any inconsistencies between recorded results.

My Role:

Gathered and organized the historical data with the co-op team, then wrote the VBA script that identified mismatched measurements and highlighted potential suspect parts.

Results/Impact:

Identified three additional actuators in the field that required corrective action and had them returned for rework. The investigation also uncovered data truncation practices and the ability to overwrite test stand values, both of which were corrected through associate retraining and software modification to prevent future data manipulation.

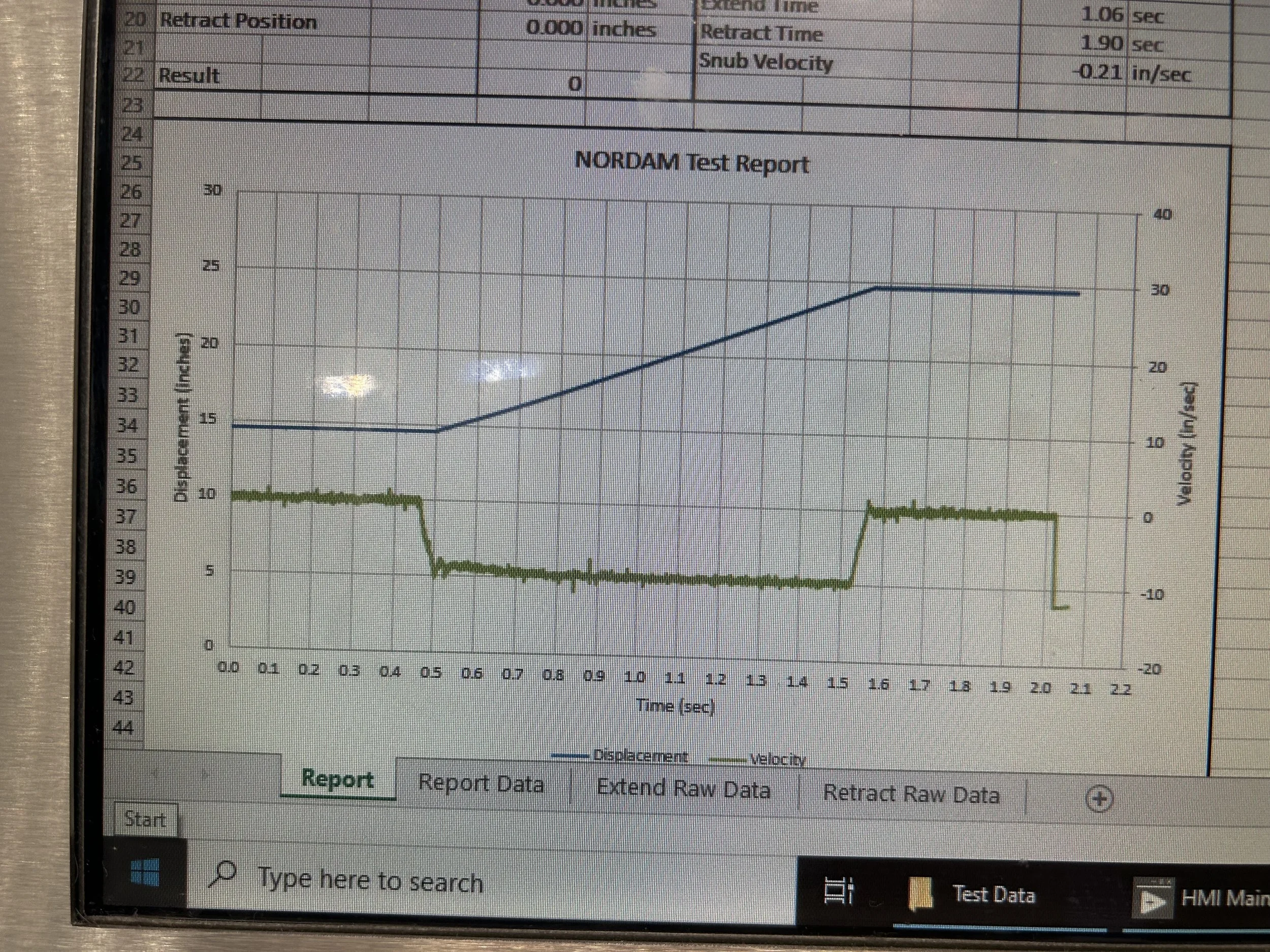

This was one of the longest projects I undertook during my time at NMG Aerospace. Several hydraulic actuators had passed all required checks during testing, yet began failing once they were in the field. When one was returned and disassembled, we discovered that the cylinder hadn’t been bored deep enough for the piston to fully retract. Because testing had not caught the defect, we needed to determine how widespread the problem might be.

The challenge was that five years’ worth of actuators had been shipped to major aerospace clients, so the potential impact was significant. We needed to cross-check multiple data sources to identify which units might share the same machining issue. Each actuator had three sets of recorded measurements: the automated test stand output, the associate’s handwritten ATR sheet, and the customer’s incoming test data. Each set contained 10 data points that we had to capture. A mismatch between any two of the sets could indicate a part that had passed testing incorrectly.

Through an automated comparison of these records, we identified three additional actuators already in the field that were likely affected and arranged for them to be returned before failure occurred. We also discovered that some associates had been taught to truncate measurements so that they fit within acceptable tolerance ranges. Additionally, the test stand software allowed data to be overwritten, meaning failing data could be replaced with passing data after repeated trials. These findings showed that the issue extended beyond machining: it also involved training and software controls.

To prevent these failures from happening again, we implemented several corrective actions. We retrained associates to record measurements exactly as displayed, without rounding or truncating. We also worked with our external software partner to remove the data-overwrite feature from the test stand system. These changes ensured that future test results could not be altered and would accurately reflect actual actuator performance.

Hydraulic Actuator Data Integrity Analysis

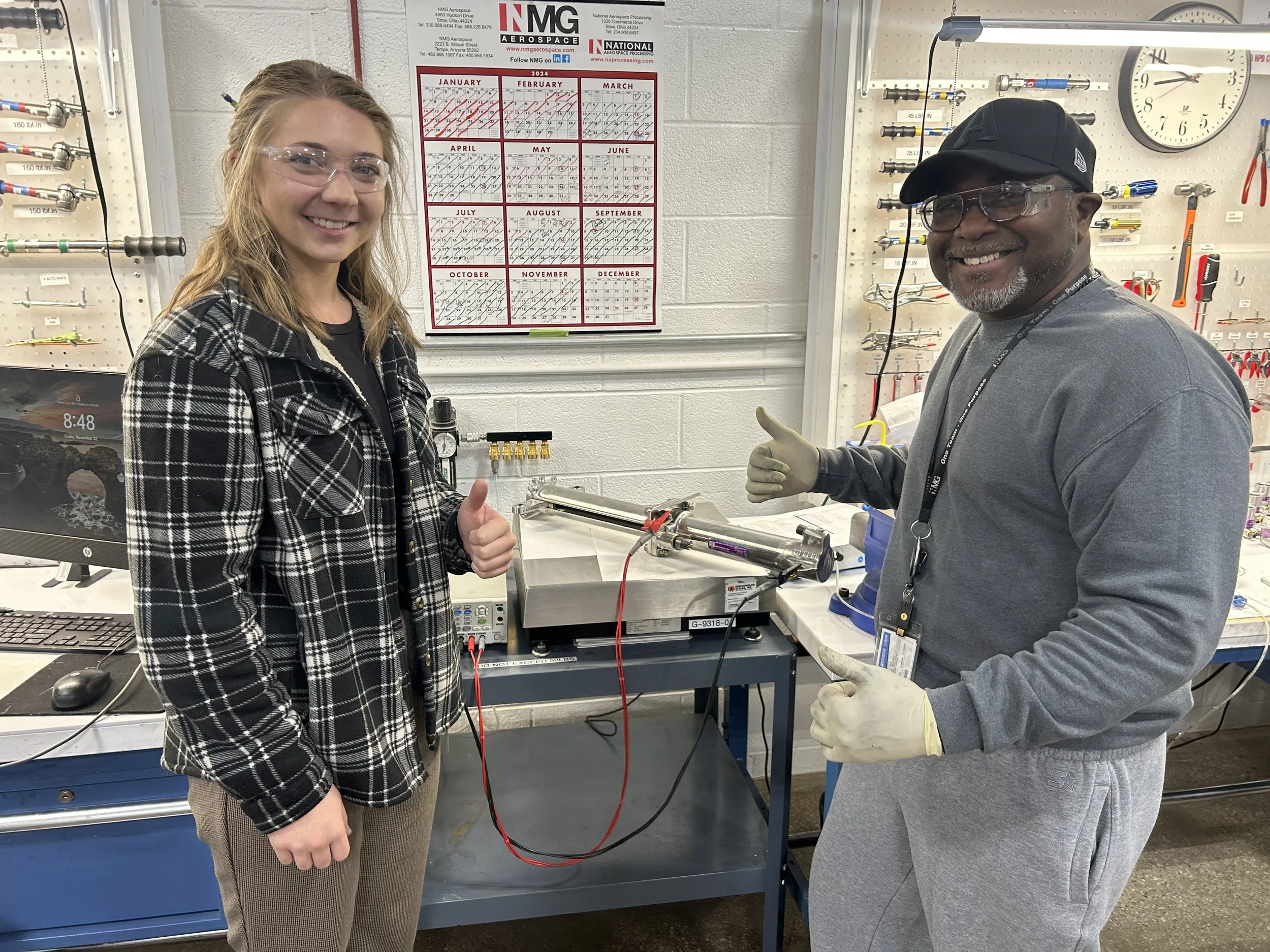

I enlisted other co-ops, manufacturing engineers, and quality teams to gather every available dataset. The information came from different systems, formats, and record-keeping habits, so the first step was organizing it into one central Excel file. This required tracing individual actuator serial numbers across multiple logs and confirming that every entry referred to the correct unit. Only after everything was aligned could we begin meaningful analysis.
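To illustrate the consolidation step, here is a minimal Python sketch (the real work was done by hand in Excel, not with this code). It groups records from the three sources by serial number and reports units that are missing from a source. The source labels, the `consolidate` and `missing_sources` names, and the serial-number normalization are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical labels for the three record sources described above.
SOURCES = ("test_stand", "atr_sheet", "customer")


def consolidate(records):
    """Group (source, serial, measurements) tuples by serial number.

    Serial numbers are stripped and upper-cased so that " sn-001" and
    "SN-001" land on the same unit (an assumed normalization rule).
    """
    by_serial = defaultdict(dict)
    for source, serial, measurements in records:
        by_serial[serial.strip().upper()][source] = measurements
    return dict(by_serial)


def missing_sources(by_serial):
    """Return {serial: [sources with no record]} for incomplete units."""
    gaps = {}
    for serial, found in by_serial.items():
        absent = [s for s in SOURCES if s not in found]
        if absent:
            gaps[serial] = absent
    return gaps
```

Units that appear in `missing_sources` cannot be compared yet, which is why alignment had to come before any analysis.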

Once the data was consolidated, I wrote a VBA script to automatically compare the test stand values, operator-recorded values, and customer-recorded values for each actuator. Instead of manually checking thousands of data points, the code highlighted any inconsistencies instantly. This allowed us to detect where numbers had been changed, rounded, or overwritten. It essentially became an automated audit of our testing integrity.
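The original script was written in Excel VBA and is not reproduced here. As a rough illustration of the comparison logic, the Python sketch below flags data points where the three sources disagree and checks whether a handwritten value looks like a truncated copy of the test stand value. The function names, the tolerance, and the number of decimal places are assumptions for the example, not details of the actual script.

```python
import math
from decimal import Decimal, ROUND_DOWN


def find_mismatches(stand, sheet, customer, tol=1e-9):
    """Return the indices of data points where the three sources differ.

    `tol` is an assumed absolute tolerance; the real acceptance limits
    are not given in this write-up.
    """
    flagged = []
    for i, (a, b, c) in enumerate(zip(stand, sheet, customer)):
        same = (math.isclose(a, b, rel_tol=0.0, abs_tol=tol)
                and math.isclose(a, c, rel_tol=0.0, abs_tol=tol))
        if not same:
            flagged.append(i)
    return flagged


def looks_truncated(stand_value, sheet_value, decimals=2):
    """True if the sheet value equals the stand value with digits cut off."""
    stand_dec = Decimal(str(stand_value))
    sheet_dec = Decimal(str(sheet_value))
    step = Decimal(10) ** -decimals  # e.g. 0.01 for two decimal places
    truncated = stand_dec.quantize(step, rounding=ROUND_DOWN)
    return truncated == sheet_dec and stand_dec != sheet_dec
```

For example, a stand reading of 2.509 recorded by hand as 2.50 would be flagged by `find_mismatches` and identified by `looks_truncated` as a truncation, while a hand-recorded 2.51 would be flagged only as a mismatch.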

This project was the first time I applied skills I had learned in school outside of the classroom. I had learned Excel VBA only the semester before, alongside other programming languages. It ended up being one of my strongest, which struck me as a bit silly at the time because I believed the language was obsolete and would have little use in the future. Little did I know that a three-month project would rely entirely on it.

In the end, this project not only prevented defective actuators from remaining in operation but also strengthened our testing reliability moving forward. It reinforced the importance of data integrity and process consistency across both people and equipment. It also showed me the value of approaching engineering problems with both analytical rigor and practical teamwork. The solution was not a new tool, but a clearer way of understanding and validating the data we already had.